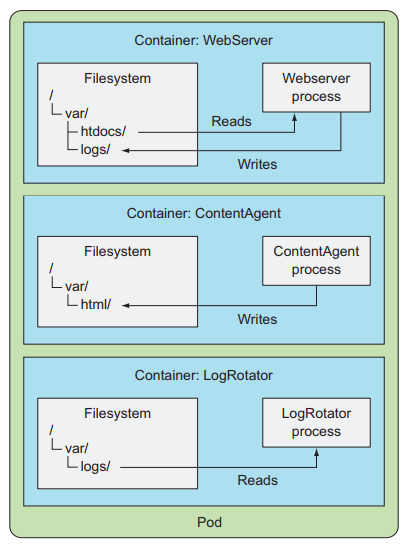

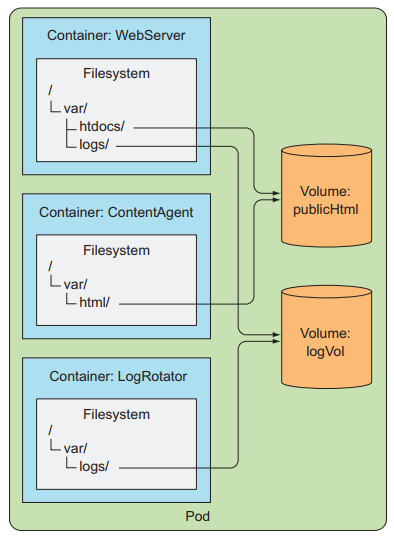

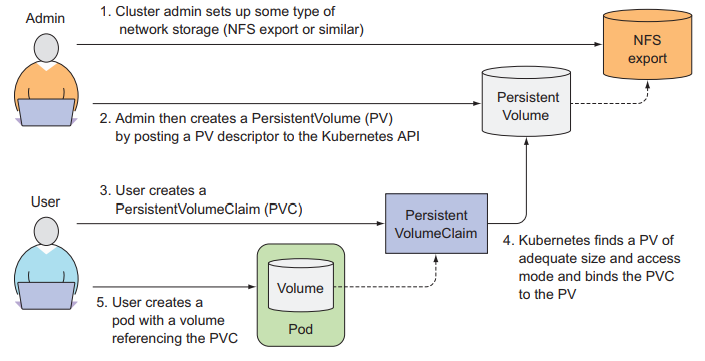

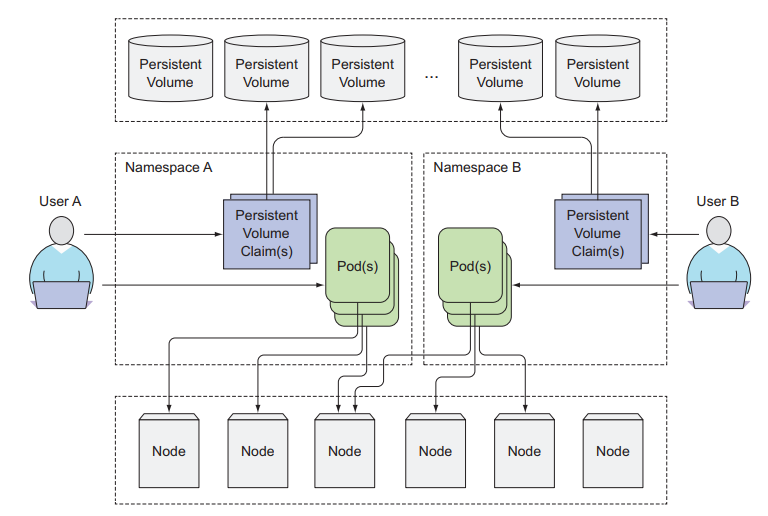

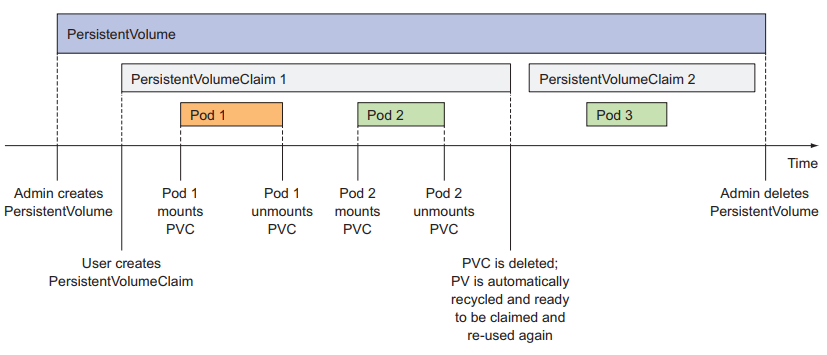

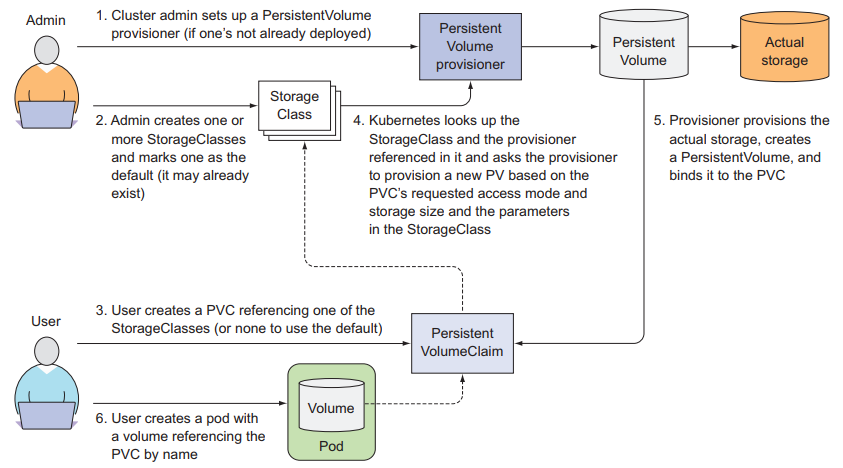

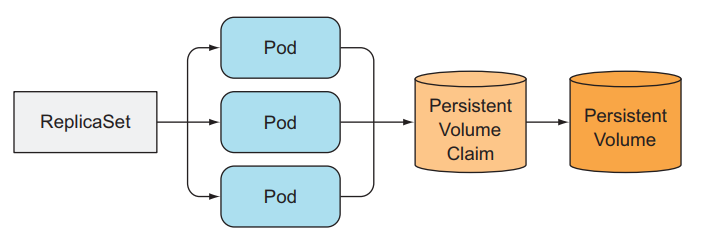

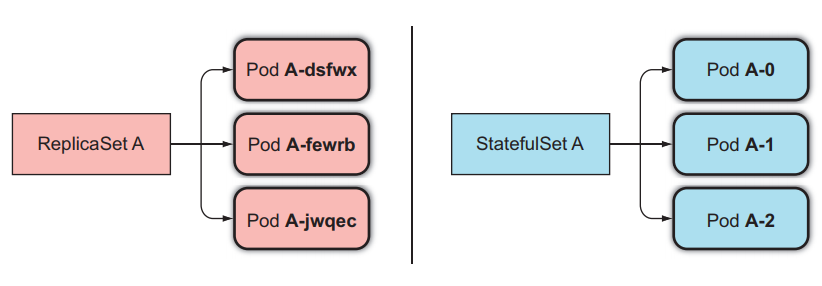

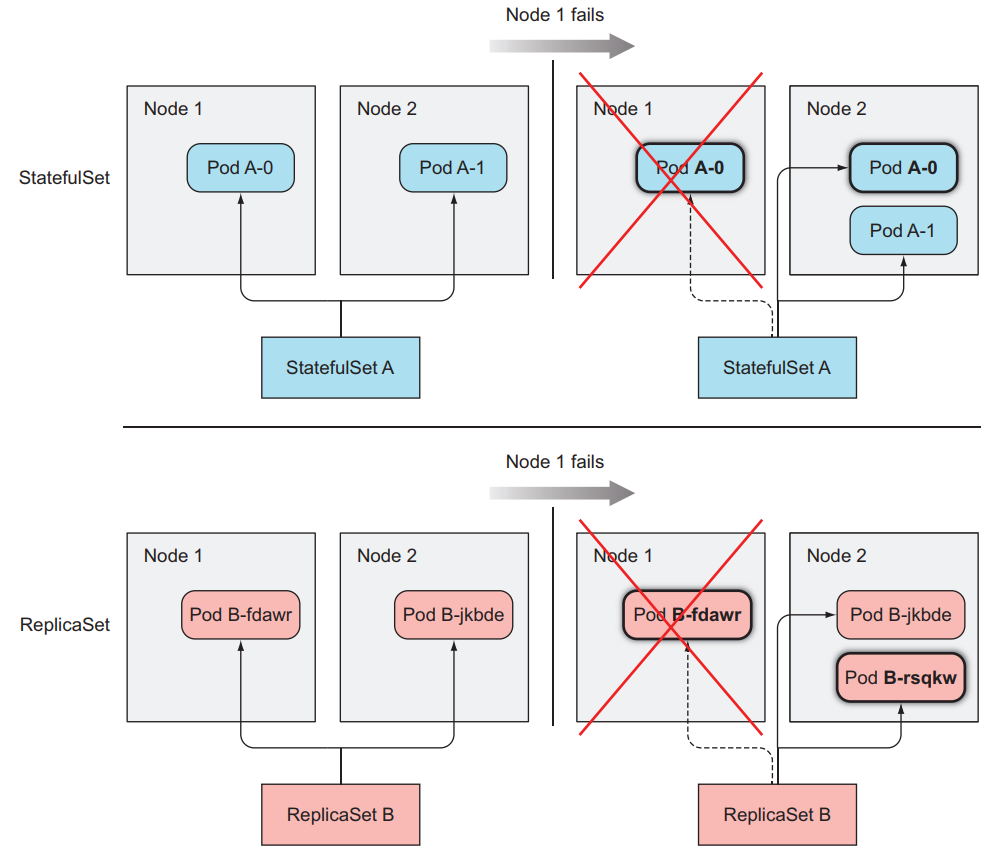

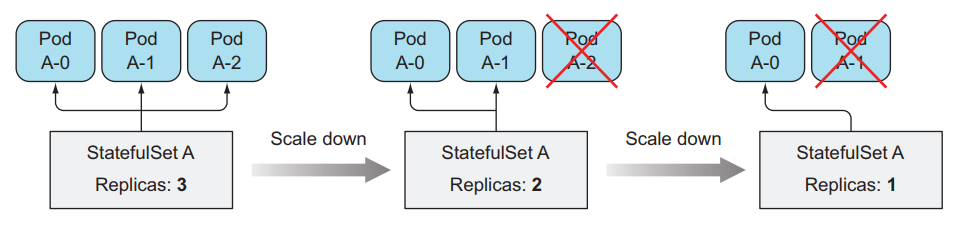

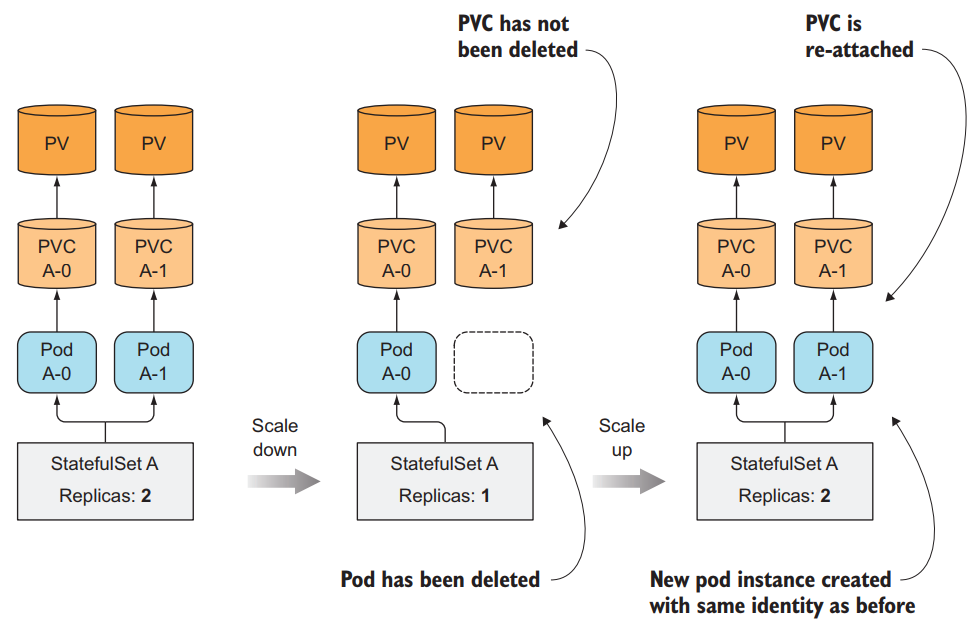

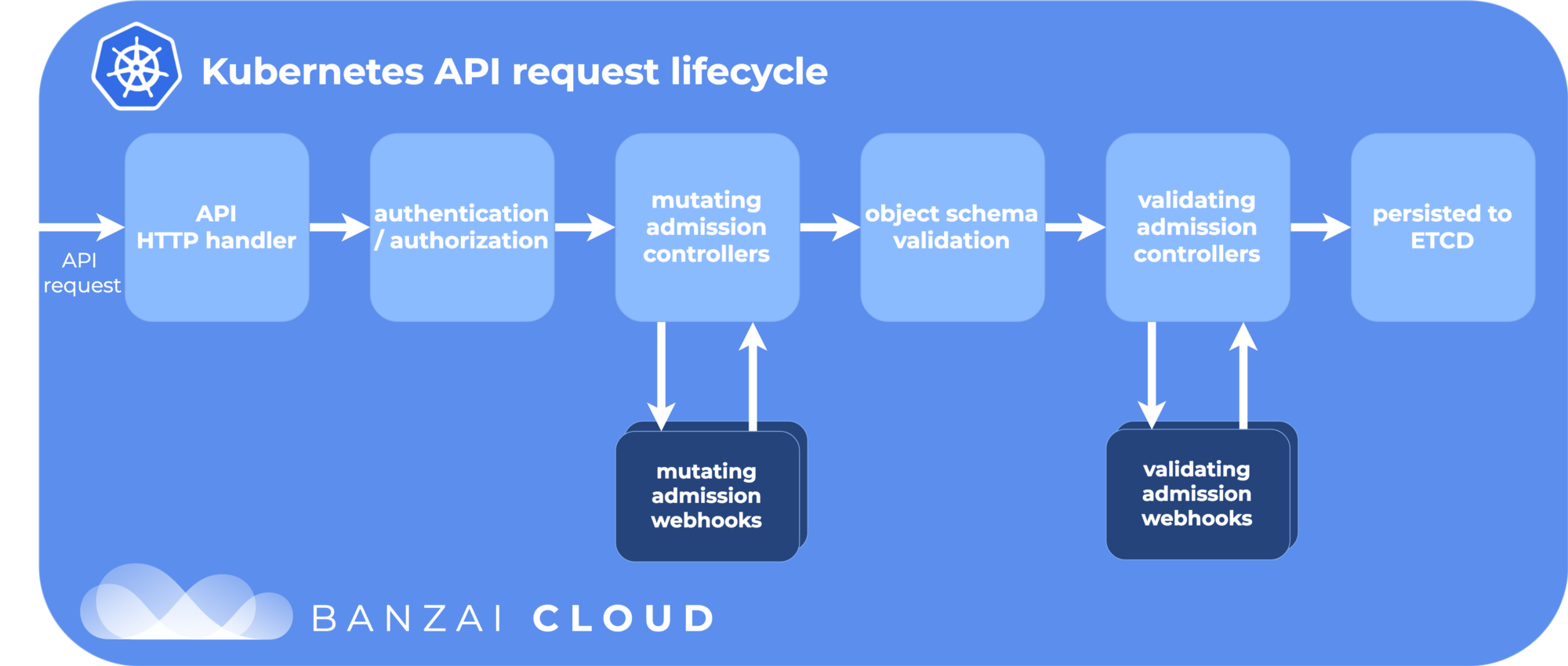

class: title, self-paced

Kubernetes Volumes, Secrets, Configurations and Advanced Features<br/>

.nav[*Self-paced version*]

.debug[
These slides have been built from commit: c51d955

[shared/title.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/shared/title.md)]

---

class: title, in-person

Kubernetes Volumes, Secrets, Configurations and Advanced Features<br/><br/><br/>

**Slides: https://ryaxtech.github.io/kube.training/**<br/>
**Chat: [Slack](https://join.slack.com/t/ryax-formation/shared_invite/enQtNjQ3OTA2NjkwODAwLTY0NzA4OGVjN2YyZWE0MTlhYTBkMTg1NGUxMGMyODE5NTM2MGJkNTk0NDk2NTU4YzQ0YjkzZTA0ZGI3NDQ0Yjc)**

---

name: toc-chapter-1

## Chapter 1

- [Introducing Volumes](#toc-introducing-volumes)
- [Introducing PersistentVolumes and PersistentVolumeClaims](#toc-introducing-persistentvolumes-and-persistentvolumeclaims)
- [Dynamic Provisioning of PersistentVolumes](#toc-dynamic-provisioning-of-persistentvolumes)
- [Rook: orchestration of distributed
storage](#toc-rook-orchestration-of-distributed-storage)
- [Decoupling configuration with a ConfigMap](#toc-decoupling-configuration-with-a-configmap)
- [Introducing Secrets](#toc-introducing-secrets)
- [Monitoring with Prometheus and Grafana](#toc-monitoring-with-prometheus-and-grafana)

.debug[(auto-generated TOC)]

---

name: toc-chapter-2

## Chapter 2

- [StatefulSets](#toc-statefulsets)
- [Running a Consul cluster](#toc-running-a-consul-cluster)
- [Highly available Persistent Volumes](#toc-highly-available-persistent-volumes)

---

name: toc-chapter-3

## Chapter 3

- [Limiting Resource Usage with Kubernetes](#toc-limiting-resource-usage-with-kubernetes)
- [Deploy Jupiter on Kubernetes](#toc-deploy-jupiter-on-kubernetes)
- [Advanced scheduling with Kubernetes](#toc-advanced-scheduling-with-kubernetes)
- [Autoscaling with Kubernetes](#toc-autoscaling-with-kubernetes)
- [Big Data analytics on Kubernetes](#toc-big-data-analytics-on-kubernetes)
- [Managing configuration](#toc-managing-configuration)
- [Extending the Kubernetes API](#toc-extending-the-kubernetes-api)

---

name: toc-chapter-4

## Chapter 4

- [Next steps](#toc-next-steps)
- [Links and resources](#toc-links-and-resources)

.debug[[shared/toc.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/shared/toc.md)]

---

name: toc-introducing-volumes
class: title

Introducing Volumes

.nav[
[Previous section](#toc-)
|
[Back to table of contents](#toc-chapter-1)
|
[Next section](#toc-introducing-persistentvolumes-and-persistentvolumeclaims)
]

.debug[(automatically generated title slide)]

---

# Introducing Volumes

- Kubernetes volumes are components of a pod and are thus defined in the pod’s specification, much like containers.
- They aren’t a standalone Kubernetes object and cannot be created or deleted on their own.
- A volume is available to all containers in the pod, but it must be mounted in each container that needs to access it.
- In each container, you can mount the volume at any location in its filesystem.

.debug[[kube/stockage.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/kube/stockage.md)]

---

## Explaining volumes in an example

- Containers with no common storage
- Containers sharing 2 volumes mounted at different mount paths

---

## Note

- The volume /var/logs is not mounted in the ContentAgent container.
- The container cannot access its files, even though the container and the volume are part of the same pod.
- It’s not enough to define a volume in the pod; you also need to define a VolumeMount in the container’s spec if you want the container to be able to access it.

---

## Volume Types

- A wide variety of volume types is available. Several are generic, while others are specific to the actual storage technologies used underneath.

* `emptyDir`: A simple empty directory used for storing transient data.
* `hostPath`: Used for mounting directories from the worker node’s filesystem into the pod.
* `gitRepo`: A volume initialized by checking out the contents of a Git repository.
* `nfs`: An NFS share mounted into the pod.
* `gcePersistentDisk`, `awsElasticBlockStore`, `azureDisk`: Used for mounting cloud provider-specific storage.
* `cinder`, `cephfs`, ...: Used for mounting other types of network storage.
* `configMap`, `secret`, `downwardAPI`: Special types of volumes used to expose certain Kubernetes resources and cluster information to the pod.
* `persistentVolumeClaim`: A way to use pre-provisioned or dynamically provisioned persistent storage.

---

## Note

- A single pod can use multiple volumes of different types at the same time.
- Each of the pod’s containers can either have the volume mounted or not.

---

## Example: a pod using a gitRepo volume

---

.exercise[

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: gitrepo-volume-pod
spec:
  containers:
  - image: nginx:alpine
    name: web-server
    volumeMounts:
    - name: html                        # must match the volume name below
      mountPath: /usr/share/nginx/html
      readOnly: true
    ports:
    - containerPort: 80
      protocol: TCP
  volumes:
  - name: html
    gitRepo:                            # note: deprecated volume type in recent Kubernetes releases
      repository: https://github.com/luksa/kubia-website-example.git
      revision: master
      directory: .                      # clone into the root of the volume
```

]

---

## Decoupling pods from the underlying storage technology

- Putting technology-specific volume details in the pod spec goes against the basic idea of Kubernetes, which aims to hide the actual infrastructure from both the application and its developer.
- When a developer needs a certain amount of persistent storage for their application, they should request it from Kubernetes, the same way they request CPU, memory, and other resources when creating a pod.
- The system administrator can configure the cluster so it can give the apps what they request.
---

name: toc-introducing-persistentvolumes-and-persistentvolumeclaims
class: title

Introducing PersistentVolumes and PersistentVolumeClaims

.nav[
[Previous section](#toc-introducing-volumes)
|
[Back to table of contents](#toc-chapter-1)
|
[Next section](#toc-dynamic-provisioning-of-persistentvolumes)
]

---

# Introducing PersistentVolumes and PersistentVolumeClaims

- Instead of the developer adding a technology-specific volume to their pod, it’s the cluster administrator who sets up the underlying storage and then registers it in Kubernetes by creating a PersistentVolume resource through the Kubernetes API server.
- When creating the PersistentVolume, the admin specifies its size and the access modes it supports.

---

## Introducing PersistentVolumes and PersistentVolumeClaims

- When a cluster user needs to use persistent storage in one of their pods, they first create a PersistentVolumeClaim manifest, specifying the minimum size and the access mode they require.
- The user then submits the PersistentVolumeClaim manifest to the Kubernetes API server, and Kubernetes finds an appropriate PersistentVolume and binds it to the claim.
- The PersistentVolumeClaim can then be used as one of the volumes inside a pod. Other users cannot use the same PersistentVolume until it has been released by deleting the bound PersistentVolumeClaim.
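The workflow above can be sketched with three manifests. This is only an illustration under assumptions: the NFS server address, export path, object names, and sizes are placeholders and do not come from the training cluster.

```yaml
# Created by the cluster administrator: registers existing storage with Kubernetes.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: example-pv              # hypothetical name
spec:
  capacity:
    storage: 1Gi                # size offered by this volume
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  nfs:                          # assumption: an NFS export exists at this address
    server: 10.0.0.10
    path: /exports/data
---
# Created by the cluster user: asks for at least 1Gi with the given access mode.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-pvc             # hypothetical name
spec:
  storageClassName: ""          # empty string: bind to a pre-provisioned PV only
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
# The pod references the claim by name, not the underlying storage technology.
apiVersion: v1
kind: Pod
metadata:
  name: example-pod             # hypothetical name
spec:
  containers:
  - name: app
    image: nginx:alpine
    volumeMounts:
    - name: data
      mountPath: /usr/share/nginx/html
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: example-pvc
```

Only the first manifest mentions NFS. If the administrator later moves the data to another storage backend, the claim and the pod can stay unchanged.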
---

## Example of PersistentVolumes and PersistentVolumeClaims

---

## PersistentVolumes and Namespaces

---

## Lifespan of PersistentVolumes and PersistentVolumeClaims

---

name: toc-dynamic-provisioning-of-persistentvolumes
class: title

Dynamic Provisioning of PersistentVolumes

.nav[
[Previous section](#toc-introducing-persistentvolumes-and-persistentvolumeclaims)
|
[Back to table of contents](#toc-chapter-1)
|
[Next section](#toc-rook-orchestration-of-distributed-storage)
]

---

# Dynamic Provisioning of PersistentVolumes

- We have seen how using PersistentVolumes and PersistentVolumeClaims makes it easy to obtain persistent storage without the developer having to deal with the actual storage technology used underneath.
- But this still requires a cluster administrator to provision the actual storage up front.
---

## Dynamic Provisioning of PersistentVolumes

- Luckily, Kubernetes can also perform this job automatically through dynamic provisioning of PersistentVolumes.
- The cluster admin, instead of creating PersistentVolumes, can deploy a PersistentVolume provisioner and define one or more StorageClass objects to let users choose what type of PersistentVolume they want.
- The users can refer to the StorageClass in their PersistentVolumeClaims and the provisioner will take that into account when provisioning the persistent storage.

---

name: toc-rook-orchestration-of-distributed-storage
class: title

Rook: orchestration of distributed storage

.nav[
[Previous section](#toc-dynamic-provisioning-of-persistentvolumes)
|
[Back to table of contents](#toc-chapter-1)
|
[Next section](#toc-decoupling-configuration-with-a-configmap)
]

---

# Rook: orchestration of distributed storage

- Rook is an open source orchestrator for distributed storage systems.
- Rook turns distributed storage software into self-managing, self-scaling, and self-healing storage services.
- It does this by automating deployment, bootstrapping, configuration, provisioning, scaling, upgrading, migration, disaster recovery, monitoring, and resource management.

---

## Rook: orchestration of distributed storage

- Rook initially focused on orchestrating Ceph on top of Kubernetes.
  Ceph is a distributed storage system that provides file, block and object storage and is deployed in large-scale production clusters.
- Rook is hosted by the Cloud Native Computing Foundation (CNCF); it joined as an inception-level project and has since graduated.

---

## Example of dynamic provisioning of PersistentVolumes using Rook

.exercise[

  ```bash
  # Paths as of the Rook release these slides target;
  # the repository layout has changed in later releases.
  git clone https://github.com/rook/rook.git
  cd rook/cluster/examples/kubernetes/ceph
  kubectl create -f common.yaml
  kubectl create -f operator.yaml
  kubectl create -f cluster-test.yaml
  ```

- Check that everything is running as expected:
  ```bash
  kubectl get pods -n rook-ceph
  ```

]

---

## Example of dynamic provisioning of PersistentVolumes using Rook

- Block storage allows you to mount storage to a single pod.
- Let's see how to build a simple, multi-tier web application on Kubernetes using persistent volumes enabled by Rook.

--

- Before Rook can start provisioning storage, a StorageClass and its storage pool need to be created.
- This is needed for Kubernetes to interoperate with Rook when provisioning persistent volumes.

---

## Example of dynamic provisioning of PersistentVolumes using Rook

.exercise[

- Create the pool and storage class:
  ```bash
  kubectl create -f storageclass.yaml
  ```

]

- Consume the storage with the WordPress sample
- We create a sample app that consumes the block storage provisioned by Rook, using the classic WordPress and MySQL apps.
- Both of these apps will make use of block volumes provisioned by Rook.
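To make the link with dynamic provisioning concrete, here is a sketch of the kind of claim such an app might declare. It assumes that `storageclass.yaml` creates a StorageClass named `rook-ceph-block`; the claim name, size, and access mode are illustrative, and the manifests shipped in the Rook repository may differ in detail.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pv-claim                # illustrative claim name
spec:
  storageClassName: rook-ceph-block   # assumed StorageClass name; selects the Rook provisioner
  accessModes:
  - ReadWriteOnce                     # block storage: mounted read-write by a single node
  resources:
    requests:
      storage: 20Gi                   # illustrative size
```

No PersistentVolume has to exist beforehand: when the claim is created, the provisioner behind the StorageClass creates a matching volume and binds it to the claim.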
---

## Example of dynamic provisioning of PersistentVolumes using Rook

.exercise[

- Start mysql and wordpress from the cluster/examples/kubernetes folder:
  ```bash
  # run from cluster/examples/kubernetes (one level above the ceph folder)
  kubectl create -f mysql.yaml
  kubectl create -f wordpress.yaml
  ```

- Both of these apps create a block volume and mount it to their respective pod. You can see the Kubernetes volume claims by running the following:
  ```bash
  kubectl get pvc
  ```

- You should see something like this:
  ```
  NAME             STATUS    VOLUME                                     CAPACITY   ACCESSMODES   AGE
  mysql-pv-claim   Bound     pvc-95402dbc-efc0-11e6-bc9a-0cc47a3459ee   20Gi       RWO           1m
  wp-pv-claim      Bound     pvc-39e43169-efc1-11e6-bc9a-0cc47a3459ee   20Gi       RWO           1m
  ```

]

---

## Example of dynamic provisioning of PersistentVolumes using Rook

.exercise[

- Once the wordpress and mysql pods are in the Running state, get the cluster IP of the wordpress app and enter it in your browser along with the port:
  ```bash
  kubectl get svc wordpress
  ```

]

You should see the wordpress app running.
---

## Teardown

.exercise[

- To clean up all the artifacts created by the block demo:
  ```bash
  kubectl delete -f wordpress.yaml
  kubectl delete -f mysql.yaml
  kubectl delete -n rook-ceph cephblockpools.ceph.rook.io replicapool
  kubectl delete storageclass rook-ceph-block
  ```

]

---

## Launch another example of dynamic provisioning

.exercise[

- Copy the file from here: https://github.com/zonca/jupyterhub-deploy-kubernetes-jetstream/blob/master/storage_rook/alpine-rook.yaml

- Modify it to match your cluster's configuration and run it using:
  ```bash
  kubectl create -f alpine-rook.yaml
  ```

]

---

## Launch another example of dynamic provisioning

- This creates a very small Pod running Alpine Linux that requests a 2 GB volume from Rook through a Persistent Volume Claim and mounts it under /data.
- The Persistent Volume Claim specifies the type of storage and its size.
- When the Pod is created, its Persistent Volume Claim triggers Rook to prepare a Persistent Volume, which is then mounted into the Pod.

---

## Launch another example of dynamic provisioning

- To verify that the Persistent Volume was created and is associated with the pod, check:

.exercise[

  ```bash
  kubectl get pv
  kubectl get pvc
  kubectl logs alpine
  ```

- Get a shell in the pod with:
  ```bash
  kubectl exec -it alpine -- /bin/sh
  ```

- Access /data/ and write some files.
- Exit the shell.
- Now delete the pod and see if you can retrieve the data you wrote.

]

---

## Launch another example of dynamic provisioning

.exercise[

- How could we have retrieved the data in the last case?
- Let's change alpine-rook.yaml to use `kind: Deployment`, write some files, and kill the pod again to see what happens.

]

---

name: toc-decoupling-configuration-with-a-configmap
class: title

Decoupling configuration with a ConfigMap

.nav[
[Previous section](#toc-rook-orchestration-of-distributed-storage)
|
[Back to table of contents](#toc-chapter-1)
|
[Next section](#toc-introducing-secrets)
]

---

# Decoupling configuration with a ConfigMap

- The whole point of an app’s configuration is to keep the config options that vary between environments, or change frequently, separate from the application’s source code.
- If you think of a pod descriptor as source code for your app (it defines how to compose the individual components into a functioning system), it’s clear you should move the configuration out of the pod description.

.debug[[kube/configs.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/kube/configs.md)]

---

## Introducing ConfigMap

- Kubernetes allows separating configuration options into a separate object called a ConfigMap, which is a map containing key/value pairs with the values ranging from short literals to full config files.
- An application doesn’t need to read the ConfigMap directly or even know that it exists. The contents of the map are instead passed to containers as either environment variables or as files in a volume.
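Both ways of passing ConfigMap entries to a container can be sketched in one pod. All names here (`app-config`, `configmap-demo`, the keys, and the mount path) are hypothetical and chosen only for illustration.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config            # hypothetical name
data:
  log-level: "debug"          # a short literal
  app.properties: |           # a whole config file stored under one key
    cache.enabled=true
    cache.size=128
---
apiVersion: v1
kind: Pod
metadata:
  name: configmap-demo        # hypothetical name
spec:
  containers:
  - name: app
    image: busybox:1.36
    command: ["sh", "-c", "echo $LOG_LEVEL; cat /etc/app/app.properties; sleep 3600"]
    env:
    - name: LOG_LEVEL         # option 1: inject one entry as an environment variable
      valueFrom:
        configMapKeyRef:
          name: app-config
          key: log-level
    volumeMounts:
    - name: config            # option 2: expose the entries as files
      mountPath: /etc/app
  volumes:
  - name: config
    configMap:
      name: app-config
```

The container only sees an environment variable and ordinary files under `/etc/app`; nothing in the application has to call the Kubernetes API.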
---

## Introducing ConfigMap

- You can define the map’s entries by passing literals to the kubectl command or you can create the ConfigMap from files stored on your disk.
- Use a simple literal first:

.exercise[

  ```bash
  kubectl create configmap fortune-config --from-literal=sleep-interval=25
  ```

- NOTE: ConfigMap keys may only contain alphanumeric characters, dashes, underscores, and dots. They may optionally include a leading dot.

]

---

## Explaining ConfigMaps in an example

- Execute the example described here: https://kubernetes.io/docs/tutorials/configuration/configure-redis-using-configmap/

---

name: toc-introducing-secrets
class: title

Introducing Secrets

.nav[
[Previous section](#toc-decoupling-configuration-with-a-configmap)
|
[Back to table of contents](#toc-chapter-1)
|
[Next section](#toc-monitoring-with-prometheus-and-grafana)
]

---

# Introducing Secrets

- Kubernetes provides a separate object called Secret. Secrets are much like ConfigMaps.
- They’re also maps that hold key-value pairs, and they can be used the same way as a ConfigMap.
- You can pass Secret entries to the container as environment variables.
- You can expose Secret entries as files in a volume.

---

## Introducing Secrets

- Kubernetes helps keep your Secrets safe by making sure each Secret is only distributed to the nodes that run the pods that need access to the Secret.
- Also, on the nodes themselves, Secrets are always stored in memory and never written to physical storage, which would require wiping the disks after deleting the Secrets from them.

---

## Introducing Secrets

- On the control plane, etcd stores Secrets unencrypted by default (they are only base64-encoded); enabling encryption at rest makes the system much more secure. Either way, it’s imperative you properly choose when to use a Secret or a ConfigMap. Choosing between them is simple:

* Use a ConfigMap to store non-sensitive, plain configuration data.

--

* Use a Secret to store any data that is sensitive in nature and needs to be kept under lock and key. If a config file includes both sensitive and non-sensitive data, you should store the file in a Secret.

---

## Exercises using Secrets

- Some initial exercises using Secrets can be found here: https://kubernetes.io/docs/concepts/configuration/secret/

---

name: toc-monitoring-with-prometheus-and-grafana
class: title

Monitoring with Prometheus and Grafana

.nav[
[Previous section](#toc-introducing-secrets)
|
[Back to table of contents](#toc-chapter-1)
|
[Next section](#toc-statefulsets)
]

---

# Monitoring with Prometheus and Grafana

- Prometheus, for monitoring
- Grafana, for displaying and exploring the metrics
.debug[[kube/monitoring.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/kube/monitoring.md)]

---

## Kube Prometheus

Kube Prometheus is a Git repository that installs a Prometheus + Grafana monitoring stack for Kubernetes. It can also configure Grafana with useful dashboards.

.exercise[

  ```bash
  # Layout as of the commit these slides target;
  # kube-prometheus has since moved to its own repository.
  git clone https://github.com/coreos/prometheus-operator.git
  cd prometheus-operator/contrib/kube-prometheus/
  kubectl create -f manifests/
  ```

]

---

## Grafana

.exercise[

- Expose the Grafana service externally and connect to it with your web browser
- To log in, you can use: admin/admin
- Click on "Home" at the top of the screen and choose the *"Pods"* dashboard.
- What is the memory usage of the registry pod?
- What is the resource cost of the Prometheus DaemonSet pods?

]

---

## Reset

```bash
kubectl delete -f manifests/
```

---

name: toc-statefulsets
class: title

StatefulSets

.nav[
[Previous section](#toc-monitoring-with-prometheus-and-grafana)
|
[Back to table of contents](#toc-chapter-2)
|
[Next section](#toc-running-a-consul-cluster)
]

---

# StatefulSets

- A StatefulSet manages a group of pods that each keep a stable name and state.
- How does it differ from a ReplicaSet (Deployment)?
- A ReplicaSet is like managing a herd of cattle: we do not care about the names of the cows, we just want to know how many we have. If a cow is ill, we replace her.
- A StatefulSet is like managing a group of pets: we give them names and we cannot replace them easily.
  If we have to replace one, we need to find one with the same name and the same appearance.

.debug[[kube/statefulsets.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/kube/statefulsets.md)]

---

## Replicate Stateful pods

Because a ReplicaSet creates all its pods from a single template, we can only give one name for the PersistentVolumeClaim.

For a ReplicaSet, all replicas use the same PersistentVolumeClaim!

---

## StatefulSets allow us to have unique names

What happens if a node dies?

---

## StatefulSet: changing the number of replicas

The pod with the highest ID is destroyed first!

What happens to the attached PVC?

---

## StatefulSet exercises

- The diagrams are taken from the book "Kubernetes in Action" by Marko Luksa

.exercise[

- *mehdb* is a database (named after the English interjection *meh*). It is meant to replicate data automatically between instances.
  ```bash
  wget https://gist.githubusercontent.com/glesserd/a0db0439e69426d92c632fb5c9bcba1c/raw/56b05fcdf9d4d1bbdf5f5cdca3fc104d7dca7d24/app.yaml
  ```

- Let's check the YAML...

]

Warning: this application does not actually work, because the data is not replicated. That does not matter for our tests with Kubernetes.
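For orientation when reading the file, a StatefulSet manifest generally has the shape sketched below. This is not the content of the downloaded `app.yaml`: the image, port, labels, and storage size are placeholders, and only the name `mehdb` is borrowed from the exercise.

```yaml
# Headless service: gives each pod a stable DNS name (mehdb-0.mehdb, mehdb-1.mehdb, ...)
apiVersion: v1
kind: Service
metadata:
  name: mehdb
spec:
  clusterIP: None
  selector:
    app: mehdb
  ports:
  - port: 9876                            # placeholder port
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mehdb
spec:
  serviceName: mehdb                      # references the headless service above
  replicas: 2
  selector:
    matchLabels:
      app: mehdb
  template:
    metadata:
      labels:
        app: mehdb
    spec:
      containers:
      - name: shard
        image: example.org/mehdb:latest   # placeholder image
        ports:
        - containerPort: 9876
        volumeMounts:
        - name: data
          mountPath: /data
  volumeClaimTemplates:                   # one claim per pod: data-mehdb-0, data-mehdb-1, ...
  - metadata:
      name: data
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi                    # placeholder size
```

The `volumeClaimTemplates` section is what a ReplicaSet lacks: instead of every replica sharing one claim, each numbered pod gets its own claim and keeps it across restarts.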
---

## Deployment

.exercise[

- Deploy it:
  ```bash
  kubectl apply -f app.yaml     # the file downloaded in the previous step
  kubectl get statefulset
  kubectl get sts               # "sts" is the short name for statefulset
  ```

- Scale the database:
  ```bash
  kubectl scale sts mehdb --replicas=4
  ```

- How did everything go?
  ```bash
  kubectl get sts
  kubectl get pvc
  ```

]

---

## Resistance to crashes

.exercise[

- Let's kill a pod!
  ```bash
  kubectl delete pod mehdb-1
  ```

- Which pod is going to be re-created?
  ```bash
  kubectl get pod
  ```

]

---

## Scale down

.exercise[

- Let's scale down:
  ```bash
  kubectl scale sts mehdb --replicas=2
  ```

- Did everything go well?
  ```bash
  kubectl get sts
  kubectl get pvc
  ```

- The PVCs are still there, as expected!

]

---

## Reset

.exercise[

- Reset:
  ```bash
  kubectl delete -f app.yaml
  ```

*Do not forget to delete the PVCs!*

]

---

## Stateful sets

- Stateful sets are a type of resource in the Kubernetes API (like pods, deployments, services...)
- They offer mechanisms to deploy scaled stateful applications
- At first glance, they look like *deployments*:
  - a stateful set defines a pod spec and a number of replicas *R*
  - it will make sure that *R* copies of the pod are running
  - that number can be changed while the stateful set is running
  - updating the pod spec will cause a rolling update to happen
- But they also have some significant differences

.debug[[k8s/statefulsets2.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/statefulsets2.md)]

---

## Stateful sets unique features

- Pods in a stateful set are numbered (from 0 to *R-1*) and ordered
- They are started and updated in order (from 0 to *R-1*)
- A pod is started (or updated) only when the previous one is ready
- They are stopped in reverse order (from *R-1* to 0)
- Each pod knows its identity (i.e. which number it is in the set)
- Each pod can discover the IP address of the others easily
- The pods can have persistent volumes attached to them

🤔 Wait a minute ... Can't we already attach volumes to pods and deployments?

---

## Persistent Volume Claims and Stateful sets

- The pods in a stateful set can define a `volumeClaimTemplate`
- A `volumeClaimTemplate` will dynamically create one Persistent Volume Claim per pod
- Each pod will therefore have its own volume
- These volumes are numbered (like the pods)
- When updating the stateful set (e.g. image upgrade), each pod keeps its volume
- When pods get rescheduled (e.g.
  node failure), they keep their volume (this requires a storage system that is not node-local)
- These volumes are not automatically deleted (when the stateful set is scaled down or deleted)

---

## Stateful set recap

- A stateful set manages a number of identical pods (like a Deployment)
- These pods are numbered, and started/upgraded/stopped in a specific order
- These pods are aware of their number (e.g., #0 can decide to be the primary, and #1 can be secondary)
- These pods can find the IP addresses of the other pods in the set (through a *headless service*)
- These pods can each have their own persistent storage (Deployments cannot do that)

---

name: toc-running-a-consul-cluster
class: title

Running a Consul cluster

.nav[
[Previous section](#toc-statefulsets)
|
[Back to table of contents](#toc-chapter-2)
|
[Next section](#toc-highly-available-persistent-volumes)
]

---

# Running a Consul cluster

- Here is a good use-case for Stateful sets!
- We are going to deploy a Consul cluster with 3 nodes - Consul is a highly-available key/value store (like etcd or Zookeeper) - One easy way to bootstrap a cluster is to tell each node: - the addresses of other nodes - how many nodes are expected (to know when quorum is reached) .debug[[k8s/statefulsets2.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/statefulsets2.md)] --- ## Bootstrapping a Consul cluster *After reading the Consul documentation carefully (and/or asking around), we figure out the minimal command-line to run our Consul cluster.* ``` consul agent -data-dir=/consul/data -client=0.0.0.0 -server -ui \ -bootstrap-expect=3 \ -retry-join=`X.X.X.X` \ -retry-join=`Y.Y.Y.Y` ``` - Replace X.X.X.X and Y.Y.Y.Y with the addresses of other nodes - The same command-line can be used on all nodes (convenient!) .debug[[k8s/statefulsets2.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/statefulsets2.md)] --- ## Cloud Auto-join - Since version 1.4.0, Consul can use the Kubernetes API to find its peers - This is called [Cloud Auto-join] - Instead of passing an IP address, we need to pass a parameter like this: ``` consul agent -retry-join "provider=k8s label_selector=\"app=consul\"" ``` - Consul needs to be able to talk to the Kubernetes API - We can provide a `kubeconfig` file - If Consul runs in a pod, it will use the *service account* of the pod [Cloud Auto-join]: https://www.consul.io/docs/agent/cloud-auto-join.html#kubernetes-k8s- .debug[[k8s/statefulsets2.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/statefulsets2.md)] --- ## Setting up Cloud auto-join - We need to create a service account for Consul - We need to create a role that can `list` and `get` pods - We need to bind that role to the service account - And of course, we need to make sure that Consul pods use that service account 
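The steps listed above can be sketched as a single manifest. This is only an illustration, not the content of the workshop's own YAML file: the name `consul` (used for the service account, the cluster role, and the binding) and the `default` namespace are assumptions made for this example.

```yaml
# Minimal RBAC sketch for Cloud Auto-join (all names are hypothetical).
apiVersion: v1
kind: List
items:
# 1. A service account for the Consul pods
- apiVersion: v1
  kind: ServiceAccount
  metadata:
    name: consul
# 2. A role that can "get" and "list" pods
- apiVersion: rbac.authorization.k8s.io/v1
  kind: ClusterRole
  metadata:
    name: consul
  rules:
  - apiGroups: [""]          # "" is the core API group, where pods live
    resources: ["pods"]
    verbs: ["get", "list"]
# 3. A binding that grants the role to the service account
- apiVersion: rbac.authorization.k8s.io/v1
  kind: ClusterRoleBinding
  metadata:
    name: consul
  roleRef:
    apiGroup: rbac.authorization.k8s.io
    kind: ClusterRole
    name: consul
  subjects:
  - kind: ServiceAccount
    name: consul
    namespace: default       # assumption: Consul runs in "default"
```

To make the Consul pods use that service account, the pod template would then set `serviceAccountName: consul` in its `spec`.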
.debug[[k8s/statefulsets2.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/statefulsets2.md)] --- ## Putting it all together - The file `k8s/consul.yaml` defines the required resources (service account, cluster role, cluster role binding, service, stateful set) - It has a few extra touches: - a `podAntiAffinity` prevents two pods from running on the same node - a `preStop` hook makes the pod leave the cluster when it is shut down gracefully This was inspired by this [excellent tutorial](https://github.com/kelseyhightower/consul-on-kubernetes) by Kelsey Hightower. Some features from the original tutorial (TLS authentication between nodes and encryption of gossip traffic) were removed for simplicity. .debug[[k8s/statefulsets2.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/statefulsets2.md)] --- ## Running our Consul cluster - We'll use the provided YAML file .exercise[ - Create the stateful set and associated service: ```bash kubectl apply -f ~/container.training/k8s/consul.yaml ``` - Check the logs as the pods come up one after another: ```bash stern consul ``` <!-- ```wait Synced node info``` ```keys ^C``` --> - Check the health of the cluster: ```bash kubectl exec consul-0 -- consul members ``` ] .debug[[k8s/statefulsets2.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/statefulsets2.md)] --- ## Caveats - We haven't used a `volumeClaimTemplate` here - That's because we don't have a storage provider yet (except if you're running this on your own and your cluster has one) - What happens if we lose a pod? 
- a new pod gets rescheduled (with an empty state) - the new pod tries to connect to the two others - it will be accepted (after 1-2 minutes of instability) - and it will retrieve the data from the other pods .debug[[k8s/statefulsets2.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/statefulsets2.md)] --- ## Failure modes - What happens if we lose two pods? - manual repair will be required - we will need to instruct the remaining one to act solo - then rejoin new pods - What happens if we lose three pods? (aka all of them) - we lose all the data (ouch) - If we run Consul without persistent storage, backups are a good idea! .debug[[k8s/statefulsets2.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/statefulsets2.md)] --- class: pic .interstitial[] --- name: toc-highly-available-persistent-volumes class: title Highly available Persistent Volumes .nav[ [Previous section](#toc-running-a-consul-cluster) | [Back to table of contents](#toc-chapter-2) | [Next section](#toc-limiting-resource-usage-with-kubernetes) ] .debug[(automatically generated title slide)] --- # Highly available Persistent Volumes - How can we achieve true durability? - How can we store data that would survive the loss of a node? 
-- - We need to use Persistent Volumes backed by highly available storage systems - There are many ways to achieve that: - leveraging our cloud's storage APIs - using NAS/SAN systems or file servers - distributed storage systems -- - We are going to see one distributed storage system in action .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Our test scenario - We will set up a distributed storage system on our cluster - We will use it to deploy a SQL database (PostgreSQL) - We will insert some test data in the database - We will disrupt the node running the database - We will see how it recovers .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Portworx - Portworx is a *commercial* persistent storage solution for containers - It works with Kubernetes, but also Mesos, Swarm ... - It provides [hyper-converged](https://en.wikipedia.org/wiki/Hyper-converged_infrastructure) storage (=storage is provided by regular compute nodes) - We're going to use it here because it can be deployed on any Kubernetes cluster (it doesn't require any particular infrastructure) - We don't endorse or support Portworx in any particular way (but we appreciate that it's super easy to install!) .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## A useful reminder - We're installing Portworx because we need a storage system - If you are using AKS, EKS, GKE ... you already have a storage system (but you might want another one, e.g. to leverage local storage) - If you have set up Kubernetes yourself, there are other solutions available too - on premises, you can use a good old SAN/NAS - on a private cloud like OpenStack, you can use e.g. Cinder - everywhere, you can use other systems, e.g. 
Gluster, StorageOS .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Portworx requirements - Kubernetes cluster ✔️ - Optional key/value store (etcd or Consul) ❌ - At least one available block device ❌ .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## The key-value store - In the current version of Portworx (1.4) it is recommended to use etcd or Consul - But Portworx also has beta support for an embedded key/value store - For simplicity, we are going to use the latter option (but if we have deployed Consul or etcd, we can use that, too) .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## One available block device - Block device = disk or partition on a disk - We can see block devices with `lsblk` (or `cat /proc/partitions` if we're old school like that!) - If we don't have a spare disk or partition, we can use a *loop device* - A loop device is a block device actually backed by a file - These are frequently used to mount ISO (CD/DVD) images or VM disk images .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Setting up a loop device - We are going to create a 10 GB (empty) file on each node - Then make a loop device from it, to be used by Portworx .exercise[ - Create a 10 GB file on each node: ```bash for N in $(seq 1 4); do ssh node$N sudo truncate --size 10G /portworx.blk; done ``` (If SSH asks to confirm host keys, enter `yes` each time.) 
- Associate the file with a loop device on each node: ```bash for N in $(seq 1 4); do ssh node$N sudo losetup /dev/loop4 /portworx.blk; done ``` ] .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Installing Portworx - To install Portworx, we need to go to https://install.portworx.com/ - This website will ask us a bunch of questions about our cluster - Then, it will generate a YAML file that we should apply to our cluster -- - Or, we can just apply that YAML file directly (it's in `k8s/portworx.yaml`) .exercise[ - Install Portworx: ```bash kubectl apply -f ~/container.training/k8s/portworx.yaml ``` ] .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- class: extra-details ## Generating a custom YAML file If you want to generate a YAML file tailored to your own needs, the easiest way is to use https://install.portworx.com/. FYI, this is how we obtained the YAML file used earlier (the URL is quoted so that the shell does not interpret the `&` characters): ``` KBVER=$(kubectl version -o json | jq -r .serverVersion.gitVersion) BLKDEV=/dev/loop4 curl "https://install.portworx.com/1.4/?kbver=$KBVER&b=true&s=$BLKDEV&c=px-workshop&stork=true&lh=true" ``` If you want to use an external key/value store, add one of the following: ``` &k=etcd://`XXX`:2379 &k=consul://`XXX`:8500 ``` ... where `XXX` is the name or address of your etcd or Consul server. 
.debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Waiting for Portworx to be ready - The installation process will take a few minutes .exercise[ - Check out the logs: ```bash stern -n kube-system portworx ``` - Wait until it gets quiet (you should see `portworx service is healthy`, too) <!-- ```longwait PX node status reports portworx service is healthy``` ```keys ^C``` --> ] .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Dynamic provisioning of persistent volumes - We are going to run PostgreSQL in a Stateful set - The Stateful set will specify a `volumeClaimTemplate` - That `volumeClaimTemplate` will create Persistent Volume Claims - Kubernetes' [dynamic provisioning](https://kubernetes.io/docs/concepts/storage/dynamic-provisioning/) will satisfy these Persistent Volume Claims (by creating Persistent Volumes and binding them to the claims) - The Persistent Volumes are then available for the PostgreSQL pods .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Storage Classes - It's possible that multiple storage systems are available - Or, that a storage system offers multiple tiers of storage (SSD vs. magnetic; mirrored or not; etc.) 
- We need to tell Kubernetes *which* system and tier to use - This is achieved by creating a Storage Class - A `volumeClaimTemplate` can indicate which Storage Class to use - It is also possible to mark a Storage Class as "default" (it will be used if a `volumeClaimTemplate` doesn't specify one) .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Our default Storage Class This is our Storage Class (in `k8s/storage-class.yaml`): ```yaml kind: StorageClass apiVersion: storage.k8s.io/v1 metadata: name: portworx-replicated annotations: storageclass.kubernetes.io/is-default-class: "true" provisioner: kubernetes.io/portworx-volume parameters: repl: "2" priority_io: "high" ``` - It says "use Portworx to create volumes" - It tells Portworx to "keep 2 replicas of these volumes" - It marks the Storage Class as being the default one .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Creating our Storage Class - Let's apply that YAML file! .exercise[ - Create the Storage Class: ```bash kubectl apply -f ~/container.training/k8s/storage-class.yaml ``` - Check that it is now available: ```bash kubectl get sc ``` ] It should show as `portworx-replicated (default)`. 
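Because this Storage Class is marked as the default, claims that do not name a class will use it. For reference, here is a sketch of how a `volumeClaimTemplate` could request it explicitly instead. This is an excerpt of a Stateful set spec, not a complete manifest, and the volume name `data` is a placeholder chosen for this example.

```yaml
# Excerpt of a StatefulSet spec (not a complete manifest).
volumeClaimTemplates:
- metadata:
    name: data                               # hypothetical volume name
  spec:
    storageClassName: portworx-replicated    # explicitly select our Storage Class
    accessModes: ["ReadWriteOnce"]
    resources:
      requests:
        storage: 1Gi
```

Naming the class explicitly keeps the claim working as intended even if another Storage Class is later marked as the default.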
.debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Our Postgres Stateful set - The next slide shows `k8s/postgres.yaml` - It defines a Stateful set - With a `volumeClaimTemplate` requesting a 1 GB volume - That volume will be mounted to `/var/lib/postgresql/data` - There is another little detail: we enable the `stork` scheduler - The `stork` scheduler is optional (it's specific to Portworx) - It helps the Kubernetes scheduler to colocate the pod with its volume (see [this blog post](https://portworx.com/stork-storage-orchestration-kubernetes/) for more details about that) .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- .small[ ```yaml apiVersion: apps/v1 kind: StatefulSet metadata: name: postgres spec: selector: matchLabels: app: postgres serviceName: postgres template: metadata: labels: app: postgres spec: schedulerName: stork containers: - name: postgres image: postgres:10.5 volumeMounts: - mountPath: /var/lib/postgresql/data name: postgres volumeClaimTemplates: - metadata: name: postgres spec: accessModes: ["ReadWriteOnce"] resources: requests: storage: 1Gi ``` ] .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Creating the Stateful set - Before applying the YAML, watch what's going on with `kubectl get events -w` .exercise[ - Apply that YAML: ```bash kubectl apply -f ~/container.training/k8s/postgres.yaml ``` <!-- ```hide kubectl wait pod postgres-0 --for condition=ready``` --> ] .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Testing our PostgreSQL pod - We will use `kubectl exec` to get a shell in the pod - Good to know: we need to use the `postgres` user in the pod .exercise[ - Get a shell in the pod, as the `postgres` user: ```bash kubectl 
exec -ti postgres-0 -- su postgres ``` <!-- autopilot prompt detection expects $ or # at the beginning of the line. ```wait postgres@postgres``` ```keys PS1="\u@\h:\w\n\$ "``` ```keys ^J``` --> - Check that default databases have been created correctly: ```bash psql -l ``` ] (This should show us 3 lines: postgres, template0, and template1.) .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Inserting data in PostgreSQL - We will create a database and populate it with `pgbench` .exercise[ - Create a database named `demo`: ```bash createdb demo ``` - Populate it with `pgbench`: ```bash pgbench -i -s 10 demo ``` ] - The `-i` flag means "create tables" - The `-s 10` flag means "create 10 x 100,000 rows" .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Checking how much data we have now - The `pgbench` tool inserts rows in table `pgbench_accounts` .exercise[ - Check that the `demo` database exists: ```bash psql -l ``` - Check how many rows we have in `pgbench_accounts`: ```bash psql demo -c "select count(*) from pgbench_accounts" ``` <!-- ```keys ^D``` --> ] (We should see a count of 1,000,000 rows.) .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Find out which node is hosting the database - We can find that information with `kubectl get pods -o wide` .exercise[ - Check the node running the database: ```bash kubectl get pod postgres-0 -o wide ``` ] We are going to disrupt that node. -- By "disrupt" we mean: "disconnect it from the network". 
.debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Disconnect the node - We will use `iptables` to block all traffic exiting the node (except SSH traffic, so we can repair the node later if needed) .exercise[ - SSH to the node to disrupt: ```bash ssh `nodeX` ``` - Allow SSH traffic leaving the node, but block all other traffic: ```bash sudo iptables -I OUTPUT -p tcp --sport 22 -j ACCEPT sudo iptables -I OUTPUT 2 -j DROP ``` ] .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Check that the node is disconnected .exercise[ - Check that the node can't communicate with other nodes: ``` ping node1 ``` - Log out to go back to `node1` <!-- ```keys ^D``` --> - Watch the events unfolding with `kubectl get events -w` and `kubectl get pods -w` ] - It will take some time for Kubernetes to mark the node as unhealthy - Then it will attempt to reschedule the pod to another node - In about a minute, our pod should be up and running again .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Check that our data is still available - We are going to reconnect to the (new) pod and check .exercise[ - Get a shell on the pod: ```bash kubectl exec -ti postgres-0 -- su postgres ``` <!-- ```wait postgres@postgres``` ```keys PS1="\u@\h:\w\n\$ "``` ```keys ^J``` --> - Check the number of rows in the `pgbench_accounts` table: ```bash psql demo -c "select count(*) from pgbench_accounts" ``` <!-- ```keys ^D``` --> ] .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Double-check that the pod has really moved - Just to make sure the system is not bluffing! 
.exercise[ - Look at which node the pod is now running on: ```bash kubectl get pod postgres-0 -o wide ``` ] .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Re-enable the node - Let's fix the node that we disconnected from the network .exercise[ - SSH to the node: ```bash ssh `nodeX` ``` - Remove the iptables rule blocking traffic: ```bash sudo iptables -D OUTPUT 2 ``` ] .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- class: extra-details ## A few words about this PostgreSQL setup - In a real deployment, you would want to set a password - This can be done by creating a `secret`: ``` kubectl create secret generic postgres \ --from-literal=password=$(base64 /dev/urandom | head -c16) ``` - And then passing that secret to the container: ```yaml env: - name: POSTGRES_PASSWORD valueFrom: secretKeyRef: name: postgres key: password ``` .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- class: extra-details ## Troubleshooting Portworx - If we need to see what's going on with Portworx: ``` PXPOD=$(kubectl -n kube-system get pod -l name=portworx -o json | jq -r '.items[0].metadata.name') kubectl -n kube-system exec $PXPOD -- /opt/pwx/bin/pxctl status ``` - We can also connect to Lighthouse (a web UI) - check the port with `kubectl -n kube-system get svc px-lighthouse` - connect to that port - the default login/password is `admin/Password1` - then specify `portworx-service` as the endpoint .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- class: extra-details ## Removing Portworx - Portworx provides a storage driver - It needs to place itself "above" the Kubelet (it installs itself straight on the nodes) - To remove it, we need to do more than just deleting its Kubernetes 
resources - It is done by applying a special label: ``` kubectl label nodes --all px/enabled=remove --overwrite ``` - Then removing a bunch of local files: ``` sudo chattr -i /etc/pwx/.private.json sudo rm -rf /etc/pwx /opt/pwx ``` (on each node where Portworx was running) .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- class: extra-details ## Dynamic provisioning without a provider - What if we want to use Stateful sets without a storage provider? - We will have to create volumes manually (by creating Persistent Volume objects) - These volumes will be automatically bound with matching Persistent Volume Claims - We can use local volumes (essentially bind mounts of host directories) - Of course, these volumes won't be available in case of node failure - Check [this blog post](https://kubernetes.io/blog/2018/04/13/local-persistent-volumes-beta/) for more information and gotchas .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- ## Acknowledgements The Portworx installation tutorial, and the PostgreSQL example, were inspired by [Portworx examples on Katacoda](https://katacoda.com/portworx/scenarios/), in particular: - [installing Portworx on Kubernetes](https://www.katacoda.com/portworx/scenarios/deploy-px-k8s) (with adaptations to use a loop device and an embedded key/value store) - [persistent volumes on Kubernetes using Portworx](https://www.katacoda.com/portworx/scenarios/px-k8s-vol-basic) (with adaptations to specify a default Storage Class) - [HA PostgreSQL on Kubernetes with Portworx](https://www.katacoda.com/portworx/scenarios/px-k8s-postgres-all-in-one) (with adaptations to use a Stateful Set and simplify PostgreSQL's setup) .debug[[k8s/portworx.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/portworx.md)] --- class: pic .interstitial[] --- name: 
toc-limiting-resource-usage-with-kubernetes class: title Limiting Resource Usage with Kubernetes .nav[ [Previous section](#toc-highly-available-persistent-volumes) | [Back to table of contents](#toc-chapter-3) | [Next section](#toc-deploy-jupiter-on-kubernetes) ] .debug[(automatically generated title slide)] --- # Limiting Resource Usage with Kubernetes - To limit the total resource usage of all containers running in a namespace, we will use **ResourceQuotas**. - We will follow the exercises here: https://kubernetes.io/docs/tasks/administer-cluster/manage-resources/quota-memory-cpu-namespace/ - To limit the resource usage of each individual container running in a namespace, we will use **LimitRange**. - We will follow the exercise here: https://kubernetes.io/docs/tasks/administer-cluster/manage-resources/memory-constraint-namespace/ .debug[[kube/advanced.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/kube/advanced.md)] --- class: pic .interstitial[] --- name: toc-deploy-jupiter-on-kubernetes class: title Deploy Jupyter on Kubernetes .nav[ [Previous section](#toc-limiting-resource-usage-with-kubernetes) | [Back to table of contents](#toc-chapter-3) | [Next section](#toc-advanced-scheduling-with-kubernetes) ] .debug[(automatically generated title slide)] --- # Deploy Jupyter on Kubernetes .exercise[ - We will follow the procedure provided here: https://zonca.github.io/2017/12/scalable-jupyterhub-kubernetes-jetstream.html ] .debug[[kube/advanced.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/kube/advanced.md)] --- class: pic .interstitial[] --- name: toc-advanced-scheduling-with-kubernetes class: title Advanced scheduling with Kubernetes .nav[ [Previous section](#toc-deploy-jupiter-on-kubernetes) | [Back to table of contents](#toc-chapter-3) | [Next section](#toc-autoscaling-with-kubernetes) ] .debug[(automatically generated title 
slide)] --- # Advanced scheduling with Kubernetes .exercise[ - We will follow the procedure provided here: https://github.com/RyaxTech/kube-tutorial#4-activate-an-advanced-scheduling-policy-and-test-its-usage ] .debug[[kube/advanced.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/kube/advanced.md)] --- class: pic .interstitial[] --- name: toc-autoscaling-with-kubernetes class: title Autoscaling with Kubernetes .nav[ [Previous section](#toc-advanced-scheduling-with-kubernetes) | [Back to table of contents](#toc-chapter-3) | [Next section](#toc-big-data-analytics-on-kubernetes) ] .debug[(automatically generated title slide)] --- # Autoscaling with Kubernetes .exercise[ - We will follow the procedure provided here: https://github.com/RyaxTech/kube-tutorial#6-enable-and-use-pod-autoscaling ] .debug[[kube/advanced.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/kube/advanced.md)] --- class: pic .interstitial[] --- name: toc-big-data-analytics-on-kubernetes class: title Big Data analytics on Kubernetes .nav[ [Previous section](#toc-autoscaling-with-kubernetes) | [Back to table of contents](#toc-chapter-3) | [Next section](#toc-managing-configuration) ] .debug[(automatically generated title slide)] --- # Big Data analytics on Kubernetes .exercise[ - We will follow the procedure provided here: https://github.com/RyaxTech/kube-tutorial#3-execute-big-data-job-with-spark-on-the-kubernetes-cluster ] .debug[[kube/advanced.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/kube/advanced.md)] --- class: pic .interstitial[] --- name: toc-managing-configuration class: title Managing configuration .nav[ [Previous section](#toc-big-data-analytics-on-kubernetes) | [Back to table of contents](#toc-chapter-3) | [Next section](#toc-extending-the-kubernetes-api) ] .debug[(automatically generated title slide)] --- # Managing configuration - Some 
applications need to be configured (obviously!) - There are many ways for our code to pick up configuration: - command-line arguments - environment variables - configuration files - configuration servers (getting configuration from a database, an API...) - ... and more (because programmers can be very creative!) - How can we do these things with containers and Kubernetes? .debug[[k8s/configuration.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/configuration.md)] --- ## Passing configuration to containers - There are many ways to pass configuration to code running in a container: - baking it in a custom image - command-line arguments - environment variables - injecting configuration files - exposing it over the Kubernetes API - configuration servers - Let's review these different strategies! .debug[[k8s/configuration.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/configuration.md)] --- ## Baking custom images - Put the configuration in the image (it can be in a configuration file, but also `ENV` or `CMD` actions) - It's easy! It's simple! - Unfortunately, it also has downsides: - multiplication of images - different images for dev, staging, prod ... - minor reconfigurations require a whole build/push/pull cycle - Avoid doing it unless you don't have the time to figure out other options .debug[[k8s/configuration.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/configuration.md)] --- ## Command-line arguments - Pass options to the `args` array in the container specification - Example ([source](https://github.com/coreos/pods/blob/master/kubernetes.yaml#L29)): ```yaml args: - "--data-dir=/var/lib/etcd" - "--advertise-client-urls=http://127.0.0.1:2379" - "--listen-client-urls=http://127.0.0.1:2379" - "--listen-peer-urls=http://127.0.0.1:2380" - "--name=etcd" ``` - The options can be passed directly to the program that we run ... ... 
or to a wrapper script that will use them to e.g. generate a config file .debug[[k8s/configuration.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/configuration.md)] --- ## Command-line arguments, pros & cons - Works great when options are passed directly to the running program (otherwise, a wrapper script can work around the issue) - Works great when there aren't too many parameters (to avoid a 20-line `args` array) - Requires documentation and/or understanding of the underlying program ("which parameters and flags do I need, again?") - Well-suited for mandatory parameters (without default values) - Not ideal when we need to pass a real configuration file anyway .debug[[k8s/configuration.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/configuration.md)] --- ## Environment variables - Pass options through the `env` list in the container specification - Example: ```yaml env: - name: ADMIN_PORT value: "8080" - name: ADMIN_AUTH value: Basic - name: ADMIN_CRED value: "admin:0pensesame!" ``` .warning[`value` must be a string! Make sure that numbers and fancy strings are quoted.] 🤔 Why this weird `{name: xxx, value: yyy}` scheme? It will be revealed soon! .debug[[k8s/configuration.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/configuration.md)] --- ## The downward API - In the previous example, environment variables have fixed values - We can also use a mechanism called the *downward API* - The downward API allows us to expose pod or container information - either through special files (we won't show that for now) - or through environment variables - The value of these environment variables is computed when the container is started - Remember: environment variables won't (can't) change after container start - Let's see a few concrete examples! 
.debug[[k8s/configuration.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/configuration.md)] --- ## Exposing the pod's namespace ```yaml - name: MY_POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace ``` - Useful to generate the FQDN of services (in some contexts, a short name is not enough) - For instance, the following two commands should be equivalent: ``` curl api-backend curl api-backend.$MY_POD_NAMESPACE.svc.cluster.local ``` .debug[[k8s/configuration.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/configuration.md)] --- ## Exposing the pod's IP address ```yaml - name: MY_POD_IP valueFrom: fieldRef: fieldPath: status.podIP ``` - Useful if we need to know our IP address (we could also read it from `eth0`, but this is more solid) .debug[[k8s/configuration.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/configuration.md)] --- ## Exposing the container's resource limits ```yaml - name: MY_MEM_LIMIT valueFrom: resourceFieldRef: containerName: test-container resource: limits.memory ``` - Useful for runtimes where memory is garbage collected - Example: the JVM (the memory available to the JVM should be set with the `-Xmx` flag) - Best practice: set a memory limit, and pass it to the runtime (see [this blog post](https://very-serio.us/2017/12/05/running-jvms-in-kubernetes/) for a detailed example) .debug[[k8s/configuration.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/configuration.md)] --- ## More about the downward API - [This documentation page](https://kubernetes.io/docs/tasks/inject-data-application/environment-variable-expose-pod-information/) tells more about these environment variables - And [this one](https://kubernetes.io/docs/tasks/inject-data-application/downward-api-volume-expose-pod-information/) explains the other way to use the downward API (through files that get created in the container 
filesystem)

---

## Environment variables, pros and cons

- Works great when the running program expects these variables
- Works great for optional parameters with reasonable defaults
  (since the container image can provide these defaults)
- Sort of auto-documented
  (we can see which environment variables are defined in the image, and their values)
- Can be (ab)used with longer values ...
- ... You *can* put an entire Tomcat configuration file in an environment variable ...
- ... But *should* you?

(Do it if you really need to, we're not judging! But we'll see better ways.)

---

## Injecting configuration files

- Sometimes, there is no way around it: we need to inject a full config file
- Kubernetes provides a mechanism for that purpose: `configmaps`
- A configmap is a Kubernetes resource that exists in a namespace
- Conceptually, it's a key/value map
  (values are arbitrary strings)
- We can think about them in (at least) two different ways:
  - as holding entire configuration file(s)
  - as holding individual configuration parameters

*Note: to hold sensitive information, we can use "Secrets", which are another type of resource behaving very much like configmaps.
We'll cover them just after!*

---

## Configmaps storing entire files

- In this case, each key/value pair corresponds to a configuration file
- Key = name of the file
- Value = content of the file
- There can be one key/value pair, or as many as necessary
  (for complex apps with multiple configuration files)
- Examples:
  ```
  # Create a configmap with a single key, "app.conf"
  kubectl create configmap my-app-config --from-file=app.conf

  # Create a configmap with a single key, "app.conf", but taken from another file
  kubectl create configmap my-app-config --from-file=app.conf=app-prod.conf

  # Create a configmap with multiple keys (one per file in the config.d directory)
  kubectl create configmap my-app-config --from-file=config.d/
  ```

---

## Configmaps storing individual parameters

- In this case, each key/value pair corresponds to a parameter
- Key = name of the parameter
- Value = value of the parameter
- Examples:
  ```
  # Create a configmap with two keys
  kubectl create cm my-app-config \
      --from-literal=foreground=red \
      --from-literal=background=blue

  # Create a configmap from a file containing key=val pairs
  kubectl create cm my-app-config \
      --from-env-file=app.conf
  ```

---

## Exposing configmaps to containers

- Configmaps can be exposed as plain files in the filesystem of a container
  - this is achieved by declaring a volume and mounting it in the container
  - this is particularly effective for configmaps containing whole files
- Configmaps can be exposed as environment variables in the container
  - this is achieved with `valueFrom`, using the same syntax as the downward API
  - this is particularly effective for configmaps containing
individual parameters
- Let's see how to do both!

---

## Passing a configuration file with a configmap

- We will start a load balancer powered by HAProxy
- We will use the [official `haproxy` image](https://hub.docker.com/_/haproxy/)
- It expects to find its configuration in `/usr/local/etc/haproxy/haproxy.cfg`
- We will provide a simple HAProxy configuration, `k8s/haproxy.cfg`
- It listens on port 80, and load balances connections between IBM and Google

---

## Creating the configmap

.exercise[

- Go to the `k8s` directory in the repository:
  ```bash
  cd ~/container.training/k8s
  ```

- Create a configmap named `haproxy` and holding the configuration file:
  ```bash
  kubectl create configmap haproxy --from-file=haproxy.cfg
  ```

- Check what our configmap looks like:
  ```bash
  kubectl get configmap haproxy -o yaml
  ```

]

---

## Using the configmap

We are going to use the following pod definition:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: haproxy
spec:
  volumes:
  - name: config
    configMap:
      name: haproxy
  containers:
  - name: haproxy
    image: haproxy
    volumeMounts:
    - name: config
      mountPath: /usr/local/etc/haproxy/
```

---

## Using the configmap

- The resource definition from the previous slide is in `k8s/haproxy.yaml`

.exercise[

- Create the HAProxy pod:
  ```bash
  kubectl apply -f ~/container.training/k8s/haproxy.yaml
  ```

<!-- ```hide kubectl wait pod haproxy --for condition=ready``` -->

- Check the IP address allocated to the pod:
  ```bash
  kubectl get pod \
    haproxy -o wide
  IP=$(kubectl get pod haproxy -o json | jq -r .status.podIP)
  ```

]

---

## Testing our load balancer

- The load balancer will send:
  - half of the connections to Google
  - the other half to IBM

.exercise[

- Access the load balancer a few times:
  ```bash
  curl $IP
  curl $IP
  curl $IP
  ```

]

We should see connections served by Google, and others served by IBM.
<br/>
(Each server sends us a redirect page. Look at the URL that they send us to!)

---

## Exposing configmaps with the downward API

- We are going to run a Docker registry on a custom port
- By default, the registry listens on port 5000
- This can be changed by setting the environment variable `REGISTRY_HTTP_ADDR`
- We are going to store the port number in a configmap
- Then we will expose that configmap as a container environment variable

---

## Creating the configmap

.exercise[

- Our configmap will have a single key, `http.addr`:
  ```bash
  kubectl create configmap registry --from-literal=http.addr=0.0.0.0:80
  ```

- Check our configmap:
  ```bash
  kubectl get configmap registry -o yaml
  ```

]

---

## Using the configmap

We are going to use the following pod definition:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: registry
spec:
  containers:
  - name: registry
    image: registry
    env:
    - name: REGISTRY_HTTP_ADDR
      valueFrom:
        configMapKeyRef:
          name: registry
          key: http.addr
```
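For reference, the `registry` configmap that this pod reads could also be written declaratively instead of being created with `kubectl create configmap`. This is only a minimal sketch, assuming the configmap holds nothing but the `http.addr` key; the value is quoted so that YAML treats it as a string:

```yaml
# Declarative equivalent (under the assumption above) of:
#   kubectl create configmap registry --from-literal=http.addr=0.0.0.0:80
apiVersion: v1
kind: ConfigMap
metadata:
  name: registry
data:
  # Key referenced by configMapKeyRef in the pod definition
  http.addr: "0.0.0.0:80"
```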
---

## Using the configmap

- The resource definition from the previous slide is in `k8s/registry.yaml`

.exercise[

- Create the registry pod:
  ```bash
  kubectl apply -f ~/container.training/k8s/registry.yaml
  ```

<!-- ```hide kubectl wait pod registry --for condition=ready``` -->

- Check the IP address allocated to the pod:
  ```bash
  kubectl get pod registry -o wide
  IP=$(kubectl get pod registry -o json | jq -r .status.podIP)
  ```

- Confirm that the registry is available on port 80:
  ```bash
  curl $IP/v2/_catalog
  ```

]

---

## Passwords, tokens, sensitive information

- For sensitive information, there is another special resource: *Secrets*
- Secrets and Configmaps work almost the same way
  (we'll explain the differences on the next slide)
- The *intent* is different, though:

  *"You should use secrets for things which are actually secret like API keys, credentials, etc., and use config map for not-secret configuration data."*

  *"In the future there will likely be some differentiators for secrets like rotation or support for backing the secret API w/ HSMs, etc."*

  (Source: [the author of both features](https://stackoverflow.com/a/36925553/580281))

---

## Differences between configmaps and secrets

- Secrets are base64-encoded when shown with `kubectl get secrets -o yaml`
  - keep in mind that this is just *encoding*, not *encryption*
  - it is very easy to [automatically extract and decode secrets](https://medium.com/@mveritym/decoding-kubernetes-secrets-60deed7a96a3)
- [Secrets can be encrypted at rest](https://kubernetes.io/docs/tasks/administer-cluster/encrypt-data/)
- With RBAC, we can
authorize a user to access configmaps, but not secrets
(since they are two different kinds of resources)

---

name: toc-extending-the-kubernetes-api
class: title

Extending the Kubernetes API

.nav[
[Previous section](#toc-managing-configuration)
|
[Back to table of contents](#toc-chapter-3)
|
[Next section](#toc-next-steps)
]

---

# Extending the Kubernetes API

There are multiple ways to extend the Kubernetes API.

We are going to cover:

- Custom Resource Definitions (CRDs)
- Admission Webhooks

.debug[[k8s/extending-api.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/extending-api.md)]

---

## Revisiting the API server

- The Kubernetes API server is a central point of the control plane
  (everything connects to it: controller manager, scheduler, kubelets)
- Almost everything in Kubernetes is materialized by a resource
- Resources have a type (or "kind")
  (similar to strongly typed languages)
- We can see existing types with `kubectl api-resources`
- We can list resources of a given type with `kubectl get <type>`

---

## Creating new types

- We can create new types with Custom Resource Definitions (CRDs)
- CRDs are created dynamically
  (without recompiling or restarting the API server)
- CRDs themselves are resources:
  - we can create a new type with `kubectl create` and some YAML
  - we can see all our custom types with `kubectl get crds`
- After we create a CRD, the new type works just like built-in types

---

## What can we do with CRDs?
There are many possibilities!

- *Operators* encapsulate complex sets of resources
  (e.g. a PostgreSQL replicated cluster; an etcd cluster...
  <br/>
  see [awesome operators](https://github.com/operator-framework/awesome-operators) and [OperatorHub](https://operatorhub.io/) to find more)
- Custom use-cases like [gitkube](https://gitkube.sh/)
  - creates a new custom type, `Remote`, exposing a git+ssh server
  - deploy by pushing YAML or Helm Charts to that remote
- Replacing built-in types with CRDs
  (see [this lightning talk by Tim Hockin](https://www.youtube.com/watch?v=ji0FWzFwNhA&index=2&list=PLj6h78yzYM2PZf9eA7bhWnIh_mK1vyOfU))

---

## Little details

- By default, CRDs are not *validated*
  (we can put anything we want in the `spec`)
- When creating a CRD, we can pass an OpenAPI v3 schema (BETA!)
  (which will then be used to validate resources)
- Generally, when creating a CRD, we also want to run a *controller*
  (otherwise nothing will happen when we create resources of that type)
- The controller will typically *watch* our custom resources
  (and take action when they are created/updated)

*Examples: [YAML to install the gitkube CRD](https://storage.googleapis.com/gitkube/gitkube-setup-stable.yaml), [YAML to install a redis operator CRD](https://github.com/amaizfinance/redis-operator/blob/master/deploy/crds/k8s_v1alpha1_redis_crd.yaml)*

---

## Service catalog

- *Service catalog* is another extension mechanism
- Strictly speaking, it doesn't extend the Kubernetes API
  (but it still provides new features!)
- It doesn't create new types; it uses:
  - ClusterServiceBroker
  - ClusterServiceClass
  - ClusterServicePlan
  - ServiceInstance
  - ServiceBinding
- It uses the Open Service Broker API

---

## Admission controllers

- When a Pod is created, it is associated with a ServiceAccount
  (even if we did not specify one explicitly)
- That ServiceAccount was added on the fly by an *admission controller*
  (specifically, a *mutating admission controller*)
- Admission controllers sit on the API request path
  (see the cool diagram on next slide, courtesy of Banzai Cloud)

---

class: pic

---

## Admission controllers

- *Validating* admission controllers can accept/reject the API call
- *Mutating* admission controllers can modify the API request payload
- Both types can also trigger additional actions
  (e.g. automatically create a Namespace if it doesn't exist)
- There are a number of built-in admission controllers
  (see [documentation](https://kubernetes.io/docs/reference/access-authn-authz/admission-controllers/#what-does-each-admission-controller-do) for a list)
- But we can also define our own!
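Defining our own is done by registering a webhook with the API server (the mechanism is described on the following slides). As a rough preview, a registration could look like the sketch below. It assumes the `admissionregistration.k8s.io/v1` API; the webhook name, the `example-namespace` / `example-service` Service and the `/validate` path are hypothetical, and `<CA_BUNDLE>` stands for the base64-encoded CA certificate used to verify the webhook's TLS certificate:

```yaml
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  name: pod-policy.example.com        # hypothetical name
webhooks:
- name: pod-policy.example.com        # hypothetical name
  # Rules: only Pod creations are submitted to this webhook
  rules:
  - apiGroups: [""]
    apiVersions: ["v1"]
    operations: ["CREATE"]
    resources: ["pods"]
    scope: "Namespaced"
  # Address of the webhook: here, a Service running in the cluster
  clientConfig:
    service:
      namespace: example-namespace    # hypothetical
      name: example-service           # hypothetical
      path: /validate                 # hypothetical
    caBundle: <CA_BUNDLE>
  admissionReviewVersions: ["v1"]
  sideEffects: None
  timeoutSeconds: 5
```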
---

## Admission Webhooks

- We can set up *admission webhooks* to extend the behavior of the API server
- The API server will submit incoming API requests to these webhooks
- These webhooks can be *validating* or *mutating*
- Webhooks can be set up dynamically
  (without restarting the API server)
- To set up a dynamic admission webhook, we create a special resource:
  a `ValidatingWebhookConfiguration` or a `MutatingWebhookConfiguration`
- These resources are created and managed like other resources
  (i.e. `kubectl create`, `kubectl get` ...)

---

## Webhook Configuration

- A ValidatingWebhookConfiguration or MutatingWebhookConfiguration contains:
  - the address of the webhook
  - the authentication information to use with the webhook
  - a list of rules
- The rules indicate for which objects and actions the webhook is triggered
  (to avoid e.g. triggering webhooks when setting up webhooks)

---

## (Ab)using the API server

- If we need to store something "safely" (as in: in etcd), we can use CRDs
- This gives us primitives to read/write/list objects
  (and optionally validate them)
- The Kubernetes API server can run on its own
  (without the scheduler, controller manager, and kubelets)
- By loading CRDs, we can have it manage totally different objects
  (unrelated to containers, clusters, etc.)
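To make this more concrete, here is a minimal sketch of a CRD declaring such a "totally different" object. It assumes the `apiextensions.k8s.io/v1` API (where each version carries a schema); the `example.com` group, the `Coffee` kind and its `taste` field are made-up names used only for illustration. Once a CRD like this is loaded, objects of that kind can be listed with `kubectl get coffees`:

```yaml
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  # Must be <plural>.<group>
  name: coffees.example.com           # hypothetical
spec:
  group: example.com                  # hypothetical group
  scope: Namespaced
  names:
    plural: coffees
    singular: coffee
    kind: Coffee                      # hypothetical kind
  versions:
  - name: v1alpha1
    served: true
    storage: true
    # OpenAPI v3 schema used to validate Coffee objects
    schema:
      openAPIV3Schema:
        type: object
        properties:
          spec:
            type: object
            properties:
              taste:
                type: string
```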
---

## Documentation

- [Custom Resource Definitions: when to use them](https://kubernetes.io/docs/concepts/extend-kubernetes/api-extension/custom-resources/)
- [Custom Resource Definitions: how to use them](https://kubernetes.io/docs/tasks/access-kubernetes-api/custom-resources/custom-resource-definitions/)
- [Service Catalog](https://kubernetes.io/docs/concepts/extend-kubernetes/service-catalog/)
- [Built-in Admission Controllers](https://kubernetes.io/docs/reference/access-authn-authz/admission-controllers/)
- [Dynamic Admission Controllers](https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/)

---

## Self-hosting our registry

*Note: this section shows how to run the Docker open source registry and use it to ship images on our cluster. While this method works fine, we recommend that you consider using one of the hosted, free automated build services instead. It will be much easier!*

*If you need to run a registry on premises, this section gives you a starting point, but you will need to make a lot of changes so that the registry is secured, highly available, and so that your build pipeline is automated.*

.debug[[k8s/buildshiprun-selfhosted.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/buildshiprun-selfhosted.md)]

---

## Using the open source registry

- We need to run a `registry` container
- It will store images and layers to the local filesystem
  <br/>(but you can add a config file to use S3, Swift, etc.)
- Docker *requires* TLS when communicating with the registry
  - except for registries on `127.0.0.0/8` (i.e.
`localhost`)
  - or with the Engine flag `--insecure-registry`
- Our strategy: publish the registry container on a NodePort,
  <br/>so that it's available through `127.0.0.1:xxxxx` on each node

---

## Deploying a self-hosted registry

- We will deploy a registry container, and expose it with a NodePort

.exercise[

- Create the registry deployment:
  ```bash
  kubectl create deployment registry --image=registry
  ```

- Expose it on a NodePort:
  ```bash
  kubectl expose deploy/registry --port=5000 --type=NodePort
  ```

]

---

## Connecting to our registry

- We need to find out which port has been allocated

.exercise[

- View the service details:
  ```bash
  kubectl describe svc/registry
  ```

- Get the port number programmatically:
  ```bash
  NODEPORT=$(kubectl get svc/registry -o json | jq '.spec.ports[0].nodePort')
  REGISTRY=127.0.0.1:$NODEPORT
  ```

]

---

## Testing our registry

- A convenient Docker registry API route to remember is `/v2/_catalog`

.exercise[

<!-- ```hide kubectl wait deploy/registry --for condition=available```-->

- View the repositories currently held in our registry:
  ```bash
  curl $REGISTRY/v2/_catalog
  ```

]

--

We should see:
```json
{"repositories":[]}
```

---

## Testing our local registry

- We can retag a small image, and push it to the registry

.exercise[

- Make sure we have the busybox image, and retag it:
  ```bash
  docker pull busybox
  docker tag busybox $REGISTRY/busybox
  ```

-
  Push it:
  ```bash
  docker push $REGISTRY/busybox
  ```

]

---

## Checking again what's on our local registry

- Let's use the same endpoint as before

.exercise[

- Ensure that our busybox image is now in the local registry:
  ```bash
  curl $REGISTRY/v2/_catalog
  ```

]

The curl command should now output:
```json
{"repositories":["busybox"]}
```

---

## Building and pushing our images

- We are going to use a convenient feature of Docker Compose

.exercise[

- Go to the `stacks` directory:
  ```bash
  cd ~/container.training/stacks
  ```

- Build and push the images:
  ```bash
  export REGISTRY
  export TAG=v0.1
  docker-compose -f dockercoins.yml build
  docker-compose -f dockercoins.yml push
  ```

]

Let's have a look at the `dockercoins.yml` file while this is building and pushing.

---

```yaml
version: "3"

services:
  rng:
    build: dockercoins/rng
    image: ${REGISTRY-127.0.0.1:5000}/rng:${TAG-latest}
    deploy:
      mode: global
  ...
  redis:
    image: redis
  ...
  worker:
    build: dockercoins/worker
    image: ${REGISTRY-127.0.0.1:5000}/worker:${TAG-latest}
    ...
    deploy:
      replicas: 10
```

.warning[Just in case you were wondering ... Docker "services" are not Kubernetes "services".]

---

class: extra-details

## Avoiding the `latest` tag

.warning[Make sure that you've set the `TAG` variable properly!]

- If you don't, the tag will default to `latest`
- The problem with `latest`: nobody knows what it points to!
  - the latest commit in the repo?
  - the latest commit in some branch? (Which one?)
  - the latest tag?
  - some random version pushed by a random team member?
- If you keep pushing the `latest` tag, how do you roll back?
- Image tags should be meaningful, i.e. correspond to code branches, tags, or hashes

---

## Checking the content of the registry

- All our images should now be in the registry

.exercise[

- Re-run the same `curl` command as earlier:
  ```bash
  curl $REGISTRY/v2/_catalog
  ```

]

*In these slides, all the commands to deploy DockerCoins will use a $REGISTRY environment variable, so that we can quickly switch from the self-hosted registry to pre-built images hosted on the Docker Hub. So make sure that this $REGISTRY variable is set correctly when running the exercises!*

---

name: toc-next-steps
class: title

Next steps

.nav[
[Previous section](#toc-extending-the-kubernetes-api)
|
[Back to table of contents](#toc-chapter-4)
|
[Next section](#toc-links-and-resources)
]

---

# Next steps

*Alright, how do I get started and containerize my apps?*

--

Suggested containerization checklist:

.checklist[
- write a Dockerfile for one service in one app
- write Dockerfiles for the other (buildable) services
- write a Compose file for that whole app
- make sure that devs are empowered to run the app in containers
- set up automated builds of container images from the code repo
- set up a CI pipeline using these container images
- set up a CD pipeline (for staging/QA) using these images
]

And *then* it is time to look at orchestration!
.debug[[k8s/whatsnext.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/whatsnext.md)]

---

## Options for our first production cluster

- Get a managed cluster from a major cloud provider (AKS, EKS, GKE...)
  (price: $, difficulty: medium)
- Hire someone to deploy it for us
  (price: $$, difficulty: easy)
- Do it ourselves
  (price: $-$$$, difficulty: hard)

---

## One big cluster vs. multiple small ones

- Yes, it is possible to have prod+dev in a single cluster
  (and implement good isolation and security with RBAC, network policies...)
- But it is not a good idea to do that for our first deployment
- Start with a production cluster + at least a test cluster
- Implement and check RBAC and isolation on the test cluster
  (e.g. deploy multiple test versions side-by-side)
- Make sure that all our devs have usable dev clusters
  (whether it's a local minikube or a full-blown multi-node cluster)

---

## Namespaces

- Namespaces let you run multiple identical stacks side by side
- Two namespaces (e.g. `blue` and `green`) can each have their own `redis` service
- Each of the two `redis` services has its own `ClusterIP`
- CoreDNS creates two entries, mapping to these two `ClusterIP` addresses:
  `redis.blue.svc.cluster.local` and `redis.green.svc.cluster.local`
- Pods in the `blue` namespace get a *search suffix* of `blue.svc.cluster.local`
- As a result, resolving `redis` from a pod in the `blue` namespace yields the "local" `redis`

.warning[This does not provide *isolation*! That would be the job of network policies.]
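As an illustration of what such a network policy could look like, the sketch below lets pods of the `blue` namespace receive traffic only from other pods of that same namespace. It assumes that the cluster's network plugin enforces network policies; the policy name is arbitrary:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-from-other-namespaces    # arbitrary name
  namespace: blue
spec:
  # An empty podSelector selects every pod in the "blue" namespace
  podSelector: {}
  ingress:
  # Only accept traffic coming from pods in the same namespace
  - from:
    - podSelector: {}
```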
---

## Relevant sections

- [Namespaces](kube-selfpaced.yml.html#toc-namespaces)
- [Network Policies](kube-selfpaced.yml.html#toc-network-policies)
- [Role-Based Access Control](kube-selfpaced.yml.html#toc-authentication-and-authorization)
  (covers permissions model, user and service accounts management ...)

---

## Stateful services (databases etc.)

- As a first step, it is wiser to keep stateful services *outside* of the cluster
- Exposing them to pods can be done with multiple solutions:
  - `ExternalName` services
    <br/>
    (`redis.blue.svc.cluster.local` will be a `CNAME` record)
  - `ClusterIP` services with explicit `Endpoints`
    <br/>
    (instead of letting Kubernetes generate the endpoints from a selector)
  - Ambassador services
    <br/>
    (application-level proxies that can provide credentials injection and more)

---

## Stateful services (second take)

- If we want to host stateful services on Kubernetes, we can use:
  - a storage provider
  - persistent volumes, persistent volume claims
  - stateful sets
- Good questions to ask:
  - what's the *operational cost* of running this service ourselves?
  - what do we gain by deploying this stateful service on Kubernetes?
- Relevant sections:
  [Volumes](kube-selfpaced.yml.html#toc-volumes)
  |
  [Stateful Sets](kube-selfpaced.yml.html#toc-stateful-sets)
  |
  [Persistent Volumes](kube-selfpaced.yml.html#toc-highly-available-persistent-volumes)

---

## HTTP traffic handling

- *Services* are layer 4 constructs
- HTTP is a layer 7 protocol
- It is handled by *ingresses* (a different resource kind)
- *Ingresses* allow:
  - virtual host routing
  - session stickiness
  - URI mapping
  - and much more!
- [This section](kube-selfpaced.yml.html#toc-exposing-http-services-with-ingress-resources) shows how to expose multiple HTTP apps using [Træfik](https://docs.traefik.io/user-guide/kubernetes/)

---

## Logging

- Logging is delegated to the container engine
- Logs are exposed through the API
- Logs are also accessible through local files (`/var/log/containers`)
- Log shipping to a central platform is usually done through these files
  (e.g.
with an agent bind-mounting the log directory)
- [This section](kube-selfpaced.yml.html#toc-centralized-logging) shows how to do that with [Fluentd](https://docs.fluentd.org/v0.12/articles/kubernetes-fluentd) and the EFK stack

---

## Metrics

- The kubelet embeds [cAdvisor](https://github.com/google/cadvisor), which exposes container metrics
  (cAdvisor might be separated in the future for more flexibility)
- It is a good idea to start with [Prometheus](https://prometheus.io/)
  (even if you end up using something else)
- Starting from Kubernetes 1.8, we can use the [Metrics API](https://kubernetes.io/docs/tasks/debug-application-cluster/core-metrics-pipeline/)
- [Heapster](https://github.com/kubernetes/heapster) was a popular add-on
  (but is being [deprecated](https://github.com/kubernetes/heapster/blob/master/docs/deprecation.md) starting with Kubernetes 1.11)

---

## Managing the configuration of our applications

- Two constructs are particularly useful: secrets and config maps
- They allow us to expose arbitrary information to our containers
- **Avoid** storing configuration in container images
  (There are some exceptions to that rule, but it's generally a Bad Idea)
- **Never** store sensitive information in container images
  (It's the container equivalent of the password on a post-it note on your screen)
- [This section](kube-selfpaced.yml.html#toc-managing-configuration) shows how to manage app config with config maps (among others)

---

## Managing stack deployments

- The best deployment tool will vary, depending on:
  - the size and complexity of your stack(s)
  - how often you change it (i.e.
add/remove components)
  - the size and skills of your team
- A few examples:
  - shell scripts invoking `kubectl`
  - YAML resource descriptions committed to a repo
  - [Helm](https://github.com/kubernetes/helm) (~package manager)
  - [Spinnaker](https://www.spinnaker.io/) (Netflix's CD platform)
  - [Brigade](https://brigade.sh/) (event-driven scripting; no YAML)

---

name: toc-links-and-resources
class: title

Links and resources

.nav[
[Previous section](#toc-next-steps)
|
[Back to table of contents](#toc-chapter-4)
|
[Next section](#toc-)
]

---

# Links and resources

All things Kubernetes:

- [Kubernetes Community](https://kubernetes.io/community/) - Slack, Google Groups, meetups
- [Kubernetes on StackOverflow](https://stackoverflow.com/questions/tagged/kubernetes)
- [Play With Kubernetes Hands-On Labs](https://medium.com/@marcosnils/introducing-pwk-play-with-k8s-159fcfeb787b)

All things Docker:

- [Docker documentation](http://docs.docker.com/)
- [Docker Hub](https://hub.docker.com)
- [Docker on StackOverflow](https://stackoverflow.com/questions/tagged/docker)
- [Play With Docker Hands-On Labs](http://training.play-with-docker.com/)

Everything else:

- [Local meetups](https://www.meetup.com/)

.footnote[These slides (and future updates) are on → http://container.training/]

.debug[[k8s/links.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/k8s/links.md)]

---

class: title, self-paced

Thank you!

.debug[[shared/thankyou.md](https://github.com/RyaxTech/kube.training.git/tree/k8s_metropole_de_lyon/slides/shared/thankyou.md)]

---

class: title, in-person

That's all, folks! <br/> Questions?